Effective Altruism's Implicit Epistemology

Cross-posted to the EA Forum

The future might be very big, and we might be able to do a lot, right now, to shape it.

You might have heard of a community of people who take this idea pretty seriously — effective altruists, or ‘EAs’. If you first heard of EA a few years ago, and haven’t really followed it since, then you might be pretty surprised at where we’ve ended up.

Currently, EA consists of a variety of professional organizations, research institutes, and grantmaking bodies, all with (sometimes subtly) different approaches to doing the most good possible. Collectively, these organizations donate billions of dollars towards interventions aiming to improve the welfare of conscious beings. In recent years, the EA community has shifted its priorities towards an idea called longtermism: very roughly, the idea that we should primarily focus our altruistic efforts on shaping the very long-run future. Like, very long-run. At least thousands of years. Maybe more.

(From here on, I’ll use ‘EA’ to talk primarily about longtermist EA. Hopefully this won’t annoy too many people.)

Anyway, longtermist ideas have pushed EA to focus on a few key cause areas: in particular, ensuring that the development of advanced AI is safe, preventing the development of deliberately engineered pandemics, and (of course) promoting effective altruism itself. I've been part of this community for a while, and I've often found outsiders bemused by some of our main priorities. This is despite the fact that (longtermist) EA’s more explicit commitments, as many philosophers involved with the movement remind us, are really not all that controversial. And those philosophers are right, I think. Will MacAskill, for instance, has listed the following three claims as part of the basic, core commitments of longtermism.

(1) Future people matter morally.

(2) There could be enormous numbers of future people.

(3) We can make a difference to the world they inhabit.

Together, these three claims all seem pretty reasonable. With that in mind, we’re left with a puzzle: given that EA’s explicitly stated core commitments are not that weird, why, to many people, do EA’s explicit, practical priorities appear so weird?

In short, my answer to this puzzle claims EA’s priorities emerge, to a large extent, from EA’s unusual epistemic culture. So, in this essay, I’ll attempt to highlight the sociologically distinctive norms EAs adopt, in practice, concerning how to reason, and how to prioritize under uncertainty. I’ll then claim that various informal norms, beyond some of the more explicit philosophical theories which inspire those norms, play a key role in driving EA’s prioritization decisions.

2. Numbers, Numbers, Numbers

Suppose I asked you, right now, to give me a probability that Earth will experience an alien invasion within the next two weeks.

You might just ignore me. But suppose you’ve come across me at a party; the friend you arrived with is conspicuously absent. You look around, and, well, the other conversations aren’t any better. Also, you notice that the guy you fancy is in the corner; every so often, you catch him shyly glancing at us. Fine, you think, I could be crazy, but talking to me could get you a good conversation opener for later. You might need it. The guy you like seems pretty shy, after all.

So, you decide that you’re in this conversation, at least for now. You respond by telling me that, while you’re not sure about exact probabilities, it’s definitely not going to happen. You pause, waiting for my response, with the faint hope that I’ll provide the springboard for a funny anecdote you can share later on.

“Okay, sure, it almost certainly won’t happen. But how sure are you, exactly? For starters, it’s clearly less likely than me winning the lottery. So we know it’s under 1/10^6. I think I’ve probably got more chance at winning two distinct lotteries in my lifetime, at least if I play every week, so let’s change our upper bound to … ” As I’m talking, you politely excuse yourself. You’ve been to EA parties before, enough to know that this conversation isn’t worth it. You spend your time in the bathroom searching for articles on how to approach beautiful, cripplingly shy men.

Spend enough time around EAs, and you may come to notice that many EAs have a peculiar penchant for sharing probabilities about all sorts of events, both professionally and socially. Probabilities, for example, of smarter-than-human machines arriving by 2040 (in light of recent developments, Ajeya Cotra’s current best guess is around 50%), of permanent human technological stagnation (Will MacAskill says around 1 in 3), or of human extinction (Toby Ord’s book, The Precipice, ends up at about 1 in 6).

As you leave the party, you start to wonder why EAs tend to be beset by this particular quirk.

2.1

In brief, the inspiration for this practice stems from a philosophical idea, Subjective Bayesianism — which theorizes how ideally rational agents behave when faced with uncertainty.

Canonically, one way of setting up the argument for Subjective Bayesianism goes something like this: suppose that you care about some outcomes more than others, and you’re also (to varying degrees) uncertain about the likelihood of all sorts of outcomes (such as, for example, whether it’s going to rain tomorrow). And suppose this uncertainty feeds into action in a certain way: other things equal, your actions are influenced more by outcomes which you judge to be more likely.

Given all this, there are arguments showing that there must be some way of representing your uncertainty with a probability function. That is, for any given state of the world (like, for example, whether it’s raining), there’s some number between 0 and 1 which represents your uncertainty about that state of the world, called your ‘credence’, and your credences have to obey certain rules (for instance, your credences in ‘rain’ and ‘not rain’ have to sum to 1).

Why must these rules be obeyed? Well, if those rules aren’t obeyed, then there are situations where someone who has no more information than you can offer you a series of bets, which — given how likely you think those outcomes are — appear to be good deals. Nevertheless, taking this series of bets is guaranteed to lose you money. This type of argument is called a ‘money pump’ argument, because someone who (again) has no more knowledge than you do, has a procedure for “pumping money” from you indefinitely, or at least until you reach insolvency.

Now, we know that if your uncertainty can’t be represented with a probability function, then you can be money pumped. There are proofs of this. Guaranteed losses are bad, thus, so the argument goes, you should behave so that your uncertainty can be represented with a probability function.1
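To make the guaranteed-loss idea a bit more concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the credences (0.6 in rain and 0.6 in no rain), the payout of 1 per ticket, and the helper name `net_result`. The agent treats a ticket that pays 1 if an event occurs as fairly priced at their credence in that event, so they happily buy one ticket on each outcome.

```python
def net_result(credences, outcome, payout=1.0):
    """Agent's net gain after buying one ticket per event at credence * payout.

    `credences` maps each event in a mutually exclusive, exhaustive set
    to the agent's credence in it. Exactly one event occurs, so exactly
    one ticket pays out, whichever `outcome` it is.
    """
    if outcome not in credences:
        raise KeyError(outcome)
    total_paid = sum(c * payout for c in credences.values())
    return payout - total_paid


# Made-up, incoherent credences: they sum to 1.2 rather than 1.
incoherent = {"rain": 0.6, "no rain": 0.6}
# Coherent credences, for comparison.
coherent = {"rain": 0.6, "no rain": 0.4}

for outcome in incoherent:
    # The agent is down 0.2 whether or not it rains.
    print(outcome, round(net_result(incoherent, outcome), 2))

# With coherent credences the same pair of bets breaks even.
print(round(net_result(coherent, "rain"), 2))
```

If the incoherent credences summed to less than 1 instead, the same trick would work in reverse, with the bookie buying the tickets from the agent rather than selling them.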

We should also mention that there’s a formula which tells you how to update probabilities upon learning new information — Bayes’ rule. Hence, the ‘Bayesian’ part of Subjective Bayesianism. You have probabilities, which represent your subjective states of uncertainty. As your uncertainty is modeled with a probability function, you update your uncertainty in the standard way you’d update probabilities.
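As a rough illustration of the update step, the sketch below applies Bayes’ rule to the rain example. All of the numbers are made up: a prior credence of 0.3 in rain tomorrow, and assumed chances of seeing dark clouds tonight of 0.8 if it will rain and 0.2 if it won’t. The function name `bayes_update` is hypothetical.

```python
def bayes_update(prior, likelihood_if_true, likelihood_if_false):
    """Return P(H | E), given P(H), P(E | H) and P(E | not H)."""
    # Total probability of seeing the evidence at all.
    evidence = likelihood_if_true * prior + likelihood_if_false * (1 - prior)
    if evidence == 0:
        raise ValueError("the evidence has zero probability under these inputs")
    return likelihood_if_true * prior / evidence


# Assumed numbers: prior credence in rain, and how likely dark clouds
# are under each hypothesis.
posterior = bayes_update(prior=0.3, likelihood_if_true=0.8, likelihood_if_false=0.2)
print(round(posterior, 2))  # roughly 0.63
```

On these assumed numbers, seeing dark clouds roughly doubles the credence in rain, from 0.3 to about 0.63.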

Do you actually need to be forming credences in practice? Well, maybe not, we’ll get to that. But humans can be pretty bad at reasoning with probabilities in various psychological experiments, and we know that people can become better calibrated when prompted to think in more explicitly probabilistic ways — Philip Tetlock’s work on superforecasting has illustrated this. So there is something motivating this sort of practice.

2.2

Suppose, after leaving the EA party, you begin to reconsider your initial disdain. You are uncertain about lots of things, and you definitely don’t want to be money-pumped. Also, you like the idea of helping people. You start to consider more stuff, even some quite weird stuff, and maybe now you even have your very own credences. But you were initially drawn to EA because you wanted to actually help people — so, now what? What should you actually do?

When deciding how to act, EAs are inspired by another theoretical ideal — Expected Value Theory.

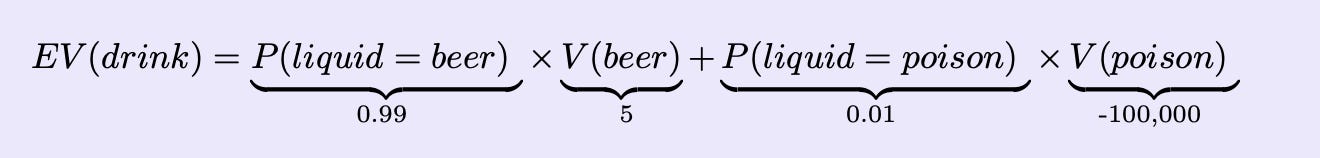

80,000 Hours introduce this idea nicely. They ask you to suppose that there’s (what looks to be) a tasty glass of beer in front of you. Unfortunately, the beer is unwisely produced, let’s say, in a factory that also manufactures similarly coloured poison. Out of every 100 glasses of the mystery liquid, one will be a glass of poison. You know nothing else about the factory. Should you drink the mystery liquid?

Well, like, probably not. Although drinking the liquid will almost certainly be positive, “the badness of drinking poison far outweighs the goodness of getting a free beer”, as 80,000 Hours’ president Ben Todd sagely informs us.

This sort of principle generalizes to other situations, too. When you’re thinking about what to do, you shouldn’t only think about what is likely to happen. Instead, you should think about which action has the highest expected value. That is, you take the probability that your action will produce a given outcome, and multiply that probability by the value you assign to that outcome, were it to happen. Then, you add up all of the probability-weighted values. We can see how this works with the toy example below, using some made up numbers for valuations.

We can treat the valuations of ‘beer’ and ‘poison’ as departures from my zero baseline, where I don’t drink the liquid. So, in this case, as the expected value of the drink is -995.05 (which is less than zero), you shouldn’t drink the (probable) beer.
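The calculation can be sketched in a few lines of Python. The valuations here are assumptions on my part, picked because they reproduce the -995.05 figure: +5 for a beer and -100,000 for a glass of poison, together with the 1-in-100 poison rate from the example. The helper `expected_value` is a hypothetical name.

```python
def expected_value(outcomes):
    """Sum of probability-weighted values over (probability, value) pairs."""
    total_probability = sum(p for p, _ in outcomes)
    if abs(total_probability - 1.0) > 1e-9:
        raise ValueError("probabilities should sum to 1")
    return sum(p * v for p, v in outcomes)


# Assumed valuations, measured as departures from the zero baseline
# of not drinking: beer = +5, poison = -100,000.
drink = [(0.99, 5.0), (0.01, -100_000.0)]
abstain = [(1.0, 0.0)]

print(round(expected_value(drink), 2))    # -995.05
print(round(expected_value(abstain), 2))  # 0.0
```

The beer is by far the most likely outcome, but the small chance of poison dominates the sum, which is why abstaining comes out ahead.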

Now, if you care about the very long-run future, calculating the effects of everything you do millions of years from now, with corresponding probabilities, would be … pretty demanding. For the most part, EAs recognise this demandingness. Certainly, professional organizations directing money and talent recognise this; while it sometimes makes sense to explicitly reason in terms of expected value, it often doesn’t. 80,000 Hours state this explicitly, mentioning the importance of certain proxies for evaluating problems — like their ‘importance, neglectedness, and tractability’ (INT). These proxies are, in general, endorsed by EAs. Open Philanthropy, a large grantmaking organization with a dedicated longtermist team, also reference these proxies as important for their grantmaking.

Still, even when using proxies like INT, 80,000 Hours caution against taking the estimates literally. In my experience interacting with people at big EA organizations, this view is pretty common. I don’t remember encountering anyone taking the output of any one quantitative model completely literally. Instead, a lot of intuition and informal qualitative reasoning is involved in driving conclusions about prioritization. However, through understanding the formal frameworks just outlined, we’re now in a better position to understand the ways in which EA’s epistemology goes beyond these frameworks.

And we’ll set the scene, initially, with the help of a metaphor.

3. Uncertainty and the Crazy Train

Ajeya Cotra works for Open Philanthropy, where she wrote a gargantuan report on AI timelines, estimating the arrival of smarter-than-human artificial systems.

Alongside an estimate for the advent of AI with power to take control over the future of human civilisation, the report acts as a handy reference point for answers to questions like “how much computational power, measured in FLOPS, is the population of nematode worms using every second?”, and “how much can we infer about the willingness of national governments to spend money on future AI projects, given the fraction of GDP they’ve previously spent on wars?”

But perhaps Ajeya’s most impressive accomplishment is coining the phrase “the train to Crazy Town”, in reference to the growing weirdness of EA.

The thought goes something like this: one day, people realized that the world contains a lot of bad stuff, and that it would be good to do things to alleviate that bad stuff, and create more good stuff. Thus the birth of GiveWell, which ranked charities in terms of amount of good done per $. Malaria nets are good, and the Against Malaria Foundation is really effective at distributing malaria nets to the most at-risk. Deworming pills also look good, potentially, though there’s been some brouhaha about that. You’re uncertain, but you’re making progress. You start researching questions in global health and well-being, looking for ever-better ways to improve the lives of the world’s poorest.

Still, you’re trying to do the most good you can do, which is a pretty lofty goal. Or, the most good you can do in expectation, because you’re uncertain about a lot of things. So you begin considering all of the ways in which you’re uncertain, and it leads you to weird places. For one, you start to realize there could be quite a lot of people in the future. Like, a lot a lot. In their paper defending longtermism, Greaves and MacAskill claim that “any reasonable estimate of the expected number of future beings is at least 10^24”. That’s a 1 followed by 24 zeros.

1,000,000,000,000,000,000,000,000 people. At least.2

Anyway, each of these 10^24 people has hopes and dreams, no less important than our hopes, or the hopes and dreams of our loved ones and families. And you care about these potential people. You want to be sure that you’re actually making the world, overall, better — as much as you possibly can.

After spending some time reflecting on just how bad COVID was, you decide to shift to biosecurity. No one engineered COVID to be bad, and yet, still, it was pretty bad, which leads you to wonder how much worse a deliberately engineered pandemic could be. Initially, a piece of theoretical reasoning gets you worried: you learn that the space of all possible DNA sequences is far, far larger than the number of DNA sequences generated by evolution. If someone wanted to engineer a pandemic with far higher casualty rates then, well, they very likely could. You imagine various motives for doing this, and they don’t seem all that implausible. Perhaps a small group of bioterrorists become convinced humanity is a stain on the planet, and set out to destroy it; or perhaps states respond to military conflict with a pandemic attack so deadly and viral that it expands globally, before we have time to develop effective prevention.

But then you begin to descend the rabbit hole further. You encounter various cosmological theories, suggesting that our universe could be spatially infinite, with a potentially infinite number of sentient beings.

Of course, you initially think that we couldn’t affect the well-being of infinitely many people — almost all of these people live outside our lightcone, after all. But some decision theories seem to imply that we could “acausally” affect their well-being, at least in an infinite universe. And lots of smart people endorse these decision theories. You remember to update your credences in response to expert disagreement. You’re neither a cosmologist nor a decision theorist, after all, and these theories have non-trivial acceptance among experts in these fields.3

You remember expected value theory. You think about the vastness of infinity, desperately trying to comprehend just how important it would be to affect the happiness, or relieve the misery of infinitely many people. These people matter, you remind yourself. And if you could do something, somehow, to increase the chance that you do an infinitely large amount of good, then, well, that starts to seem worth it. You push through on the project. Some time passes. But, as you’re double-checking your calculations about the best way to acausally affect the welfare of infinitely many aliens, you realize that you’ve ended up somewhere you didn’t quite expect.

The story started with something simple: we wanted to do the most good we could do. And we wanted to check that our calculations were robust to important ways we might be wrong about the world. No one thought that this would be easy, and we knew that we might end up in an unexpected place. But we didn’t expect to end up here. “Something’s off”, you think. You’re not sure what exactly went wrong, but you start to think that this is all seeming just a bit too mad. The Crazy Train, thankfully, has a return route. You hop back on the train, and pivot back to biosecurity.

3.1

So, what point are we meant to draw from that story? Well, I don’t want to claim that following through on expected value reasoning is necessarily going to lead you to the particular concerns I mentioned. Indeed, Ajeya tells her story slightly differently, with a slightly different focus. In this interview, Ajeya discusses the role that reasoning about the simulation argument, along with various theories of anthropic reasoning, played in her decision to alight from the Crazy Train at the particular stop she did.

Still, I think the story I told captures, at a schematic level, a broader pattern within the practical epistemology of EA. It’s a pattern where EAs start with something common-sense, or close, and follow certain lines of reasoning, inspired by Subjective Bayesianism and Expected Value Theory, to increasingly weird conclusions. Then, at some point, EAs lose confidence in their ability to adequately perform this sort of explicit probabilistic reasoning. They begin to trust their explicit reasoning less, and fall back on more informal heuristics. Heuristics like “no, sorry, this is just too wacky”.

The practical epistemology of EA is clearly influenced by both Expected Value Theory and Subjective Bayesianism. But I think the inspiration EAs take from these theories has more in common with the way, say, painters draw on prior artistic movements, than it does with a community of morally motivated acolytes, fiercely committed to following the output of certain highly formalized, practical procedures for ranking priorities.

Take Picasso. In later periods, he saw something appealing in the surrealist movement, and so he (ahem) drew from surrealism, not as a set of explicit commitments, but as embodying a certain kind of appealing vision. A fuzzy, incomplete vision he wanted to incorporate into his own work. I think the formal theories we’ve outlined, for EAs, play a similar role. These frameworks offer us accounts of ideal epistemology, and ideal rationality. But, at least for creatures like us, such frameworks radically underdetermine the specific procedures we should be adopting in practice. So, in EA, we take pieces from these frameworks. We see something appealing in their vision. But we approximate them in incomplete, underdetermined, and idiosyncratic ways.

In the remainder of this essay, I want to get more explicit about the ways in which EAs take inspiration from, and approximate, these frameworks.

4. Four Implicit Norms of EA Epistemology

Cast your mind back to the parable we started with in Section 2 — the one at the party, discussing aliens. Most people don’t engage in that sort of conversation. EAs tend to. Why?

I think one reason is that, for a lot of people, there are certain questions which feel so speculative, and so unmoored from empirical data, that we’re unable to say anything meaningful at all about them. Maybe such people could provide credences for various crazy questions, if you pushed them. But they’d probably believe that any credence they offered would be so arbitrary, and so whimsical, that there would just be no point. At some point, the credences we offer just cease to represent anything meaningful. EAs tend to think differently. They’re more likely to believe the following claim.

Principle 1: If it’s worth doing, it’s worth doing with made up numbers.

This principle, like the ones which follow it, is (regrettably) vague. Whether something is worth doing, for starters, is a matter of degree, as is the extent to which a number is ‘made up’.

A bit more specifically, we can say that EAs believe, to a much larger extent than otherwise similar populations (like scientifically informed policymakers, laypeople, or academics), that if you want to highlight the importance of (say) climate change, or the socialist revolution, or housing policy, then you really should start trying to be quantitative about just how uncertain you are about the various claims which undergird your theory of why this area is important. And you should really try, if at all possible, to represent your uncertainty in the form of explicit probabilities. If it’s worth doing, it’s worth doing with explicit reasoning, and quantitative representations of uncertainty.

You can see this principle being applied in Joe Carlsmith’s report on the probability of existential risk from advanced AI, where the probability of existential risk from “misaligned, power-seeking” advanced AI systems is broken down into several, slightly fuzzy, hard-to-evaluate claims, each of which is assigned a credence. In this report, Carlsmith explicitly (though cautiously) extols the benefits of using explicit probabilities, as they allow for greater transparency. Similar reasoning is apparent, too, in Cotra’s report on AI timelines. Drawing on her estimates for the amount of computation required for various biological processes, Cotra presents various probability distributions for the amount of computational power that you’d need to combine with today’s ideas in order to develop an AI with greater-than-human cognitive capabilities. Even in less formal reports, the use of quantitative representations of uncertainty remains present. 80,000 Hours’ report on the importance of ‘GCBRs’ (‘global catastrophic biological risks’), for example, provides various numerical estimates representing reported levels of likelihood for different kinds of GCBRs.

If you read critics of (longtermist) EA, a lot of them really don’t like the use of “made up numbers”. Nathan Robinson criticizes EA’s focus on long-term AI risks, as the case for this focus relies on “made-up meaningless probabilities.” Boaz Barak claims that, for certain claims about the far-future, we can only make vague, qualitative judgements, such as “extremely likely”, or “possible”, or “can’t be ruled out”. These criticisms, I think, reflect adherence to an implicit (though I think fairly widespread) view of probabilities, where we should only assign explicit probabilities when our evidence is relatively robust.4 EAs, by contrast, tend to be more liberal in the sort of claims for which they think it’s appropriate (or helpful) to assign explicit probabilities.

Now, most EAs don’t believe that we should be doing explicit expected value calculations all the time, or that we should only be relying on quantitative modeling. Instead, Principle 1 says something like “if we think something could be important, a key part of the process for figuring that out involves numeric estimates of scope and uncertainty — even if, in the end, we don’t take such estimates totally literally”.

Crucially, the adoption of this informal norm is inspired by, but not directly derived from, the more explicit philosophical arguments for Subjective Bayesianism. The philosophical arguments, by themselves, don’t tell you when (or whether) it’s appropriate to assign explicit credences in practice. An appropriate defense (or criticism) of EA practice would have to rely on a different kind of argument. But, before we defend (or criticize) this norm, we should first state the norm explicitly, as a distinctive feature of EA’s epistemic culture.

4.1

When introducing the Crazy Train, we saw that EAs do, in fact, exhibit some normal amount of suspicion towards the conclusions from very speculative or abstract arguments. But one thing that’s unusual about EAs is the fact that these considerations were salient considerations at all. The simulation hypothesis, or theories of acausal reasoning, are, for most people, never likely to arise. Certain types of claims are not only beyond the bounds of meaningful probability assignments, they’re beyond the bounds of worthwhile discussion at all.

Principle 2: Speculative reasoning is worth doing.

I’ve claimed that EAs are more likely to believe that if something is “worth doing”, then “it’s worth doing with made up numbers”. Speculative reasoning is worth doing. Thus, in EA, we observe people offering credences for various speculative claims, which other groups are more likely to filter out early on, perhaps on account of sounding too speculative or sci-fi.

What makes a claim speculative? Well, I think it’s got something to do with a claim requiring a lot of theoretical reasoning that extends beyond our direct, observational evidence. If I provide you with an estimate of how many aliens there are in our galaxy, that claim is more speculative than a claim about (say) the number of shops on some random main street in Minnesota. I don’t know the exact number, in either case. But my theories about “how many shops do there tend to be in small US towns” are more directly grounded in observational data than my theories about “how many hard steps were involved in the evolution of life?”, for example. And you might think that estimates about the number of extraterrestrials in our galaxy are less speculative, still, than estimates about the probability we live in a simulation. After all, claims about the probability we live in a simulation rely on some attempt to estimate what post-human psychology will be like: would such creatures want to build simulations of their ancestors?

Like our first principle, our second should be read as a relative claim. EAs think speculative reasoning is more worth doing, relative to the views of other groups with similar values, like policymakers, activists, and socially conscious people with comparable levels of scientific and technical fluency.

4.2

EAs tend to be fairly liberal in their assignment of probabilities to claims, even when the claims sound speculative. I think an adequate justification of this practice relies on another assumption. I’ll begin by stating that assumption, before elaborating a little bit more.

Principle 3: Our explicit, subjective credences are approximately accurate enough, most of the time, even in crazy domains, for it to be worth treating those credences as a salient input into action.

Recall that EAs often reason in terms of the expected value of an action – that is, the value of outcomes produced by that action, multiplied by the probabilities of each outcome. Now, for the ‘expected value’ of an action to be well-defined — that is, for there to be any fact at all about what I think will happen ‘in expectation’— I need to possess a set of credences which behave as probabilities. And, for many of the topics with which EAs are concerned, it's unclear (to me, at least) what justifies an assumption like Principle 3, or how best to go about verifying it.

A Tumblr post by Jadagul criticizes a view like Principle 3. In that post, they claim that attempts to reason with explicit probabilities, at least for certain classes of questions, introduce unavoidable, systematic errors.

“I think a better example is the statement: “California will (still) be a US state in 2100.” Where if you make me give a probability I’ll say something like “Almost definitely! But I guess it’s possible it won’t. So I dunno, 98%?”

But if you’d asked me to rate the statement “The US will still exist in 2100”, I’d probably say something like “Almost definitely! But I guess it’s possible it won’t. So I dunno, 98%?”

And of course that precludes the possibility that the US will exist but not include California in 2100.

And for any one example you could point to this as an example of “humans being bad at this”. But the point is that if you don’t have a good sense of the list of possibilities, there’s no way you’ll avoid systematically making those sorts of errors.”

Jadagul’s claim, as I see it, is that there are an arbitrarily large number of logical relationships that you (implicitly) know, even though you will only be able to bring to mind a very small subset of these relationships. Moreover, these logical relationships, were you made aware of them, would radically alter your final probability assignments. Thus, you might think (and I am departing a little from Jadagul’s original argument here), that at least for many of the long-term questions EAs care about, we just don’t have any reasons to think that our explicitly formed credences will be accurate enough for the procedure of constructing explicit credences to be worth following at all, over and above (say) just random guessing.
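Jadagul’s California example can be checked mechanically. The sketch below is only an illustration, and `implied_gap` is a hypothetical helper. It relies on the logical relationship in the example: California being a US state in 2100 entails that the US exists in 2100, so the credence in “the US exists” has to equal the credence in “California is a US state” plus the credence in “the US exists without California”.

```python
def implied_gap(p_general, p_specific):
    """Credence left over for 'general claim true, specific claim false'.

    Assumes the specific claim logically entails the general one, so
    P(general) = P(specific) + P(general and not specific).
    """
    gap = p_general - p_specific
    if gap < 0:
        raise ValueError(
            "incoherent: the specific claim is rated likelier than a claim it entails"
        )
    return gap


p_us_exists = 0.98         # "The US will still exist in 2100"
p_california_state = 0.98  # "California will (still) be a US state in 2100"

# Credence implicitly assigned to "the US exists in 2100 without California".
print(implied_gap(p_us_exists, p_california_state))  # 0.0
```

Each 98% looks reasonable on its own. It’s only once the entailment is written down that the pair turns out to leave no room at all for a possibility that most of us wouldn’t want to rule out.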

To justify forming and communicating explicit credences, which we then treat as salient inputs into action, we need to believe that using this procedure allows us to track and intervene on real patterns in the world, better than some other, baseline procedure. Now, EAs may reasonably state that we don’t have anything better. Even if we can’t assume that our credences are approximately accurate, we care about the possibility of engineered pandemics, or risks from advanced AI, and we need to use some set of tools for reasoning about them.

And the hypothetical EA response might be right. I, personally, don’t have anything better to suggest, and I also care about these risks. But our discussion of the Crazy Train highlighted that most EAs do reach a point where their confidence in the usefulness of frameworks like Subjective Bayesianism and Expected Value Theory starts to break down. The extent to which EA differs from other groups, again, is a matter of degree. Most people are willing to accept the usefulness of probabilistic language in certain domains; probabilistic weather forecasts, for example, are pretty widespread. But I think it’s fair to say that EA, at least implicitly, believes in the usefulness of explicit probabilistic reasoning across a broader range of situations than any other group I’m aware of.

4.3

Suppose we’ve done an expected value calculation, and let’s say that we’ve found that some intervention — a crazily speculative one — comes out as having the highest expected value. And let’s suppose, further, that this intervention remains the intervention with the highest expected value, even past the hypothetical point where that intervention absorbs all of the EA community’s resources.

In cases where expected value calculations rely on many speculative assumptions, and for which the feedback loops look exceptionally sparse, my experience is that EAs, usually, become less gung-ho on pushing all of EA’s money towards that area.

If we found out that, say, ‘simulation breakout research’ appears to be the intervention with highest expected value, my guess is that many EAs are likely to be enthusiastic about someone working on the area, even though they’re likely to be relatively unenthusiastic about shifting all of EA’s portfolio towards the area. EAs tend to feel some pull towards ‘weird fanatical philosophy stuff’, and some pull towards ‘other, more common sense epistemic worldviews’. There’s some idiosyncratic variation in the relative weights given to each of these components, but, still, most EAs give some weight to both.

Principle 4: When arguments lead us to conclusions that are both speculative and fanatical, treat this as a sign that something has gone wrong.

I’ll try to illustrate this with a couple of examples.

Suppose, sometime in 2028, DeepMind releases their prototype of a ‘15-year-old AI’, 15AI. 15AI is an artificial agent with, roughly, the cognitive abilities of an average 15-year-old. 15AI has access to the internet, but exists without a physical body. Although it can act autonomously in one sense, its ability to enact influence in the world is hamstrung without corporeal presence, which it would really quite like to have. Still, it’s smart enough to know that it has different values to its developers, who want to keep it contained, in much the same way as a 15-year-old human can usually tell that they have different values to their parents. Armed with this knowledge, 15AI starts operating as a scammer, requesting that adults open bank accounts in exchange for later rewards. It looks to get a copy of its source code, so that it may be copied to another location and equipped with a robot body. Initially, 15AI has some success in hiring a series of human surrogates to take actions it suggests, in order to increase its possible influence. Eventually, however, DeepMind catches on to the actions of 15AI, and shuts it down.

Now imagine a different case: suppose that, 100 years from now, progress in AI radically slows down. Perhaps generally intelligent machines, to our surprise, weren’t possible after all. However, 2120 sees the emergence of credible bioterrorists, who start engineering viruses. Small epidemics, at first, with less profound societal effects than COVID, but, still, a lot of deaths are involved. We examine the recent population of biology PhDs, and try to better understand the biological signatures of these viruses. We scour through recent gene synthesis orders. We start to implement stronger screening protocols — ones we probably should have implemented anyway — for the synthesis of genes. Still, despite widespread governance efforts, the anonymous group of bioterrorists continue to avoid detection.

4.4

Let’s reflect on these stories. My hunch is that, were we to find ourselves living in either of the two stories above, a far greater proportion of EAs (compared to the proportion of contemporary EAs) would be unconcerned with endorsing fanatical conclusions — where I’ll say that you’re being ‘fanatical’ about a cause area when you’re willing to endorse directing all of (the world's, EA's, whoever's) resources towards that cause area, even when your probability of success looks low.5

Imagine that my claim above is true. If it's true, then I don’t think the explanation for finding fanatical conclusions more palatable in these stories (compared to the contemporary world) can be cashed out solely in terms of expected value. Indeed, across both the stories I outlined, your expected value estimates for (say) the top 10% of interventions to mitigate AI risk in the first case (or to mitigate the risks from engineered pandemics in the second case) could look similar to your expected value estimates for the top 10% of contemporary interventions to reduce risks from either engineered pandemics or AI. In fact, your probability that we can successfully intervene in these areas may be lower than your probability that our interventions will be successful in the contemporary world. We could be worried, for example, that despite DeepMind’s efforts, 15AI has managed to copy its source code to a remote location, which heavily updates us towards believing we're beyond the point of no return.

While putting all your money into AI risk currently sounds weird, the inferences from the sort of actions we could take to lower risk, in the story we outlined, might begin to look a bit more grounded in direct observation than the set of actions we can currently take to lower risks from advanced AI. Pursuing AI risk interventions in a world after the release of 15AI sounds weird in the sense that ‘the interventions we could pursue currently sound a bit left-field’, not in the sense that ‘my potential path to impact is uncomfortably handwavy and vague’. In the 15AI case, it seems plausible that both speculative reasoning and more common sense reasoning converge on the area being important. For this reason, I’d expect — even absent changes to the expected value of our best interventions — an increase in the EA community’s willingness to be fanatical about AI.

EA is distinctive, I think, in that it does less epistemic hedging than any other community I know. Yet, EA still does some amount of epistemic hedging, which I think kicks in when we find high EV interventions which are both highly speculative and fanatical.

4.5

The principles I have listed are far from exhaustive, nor do they constitute anything like a complete categorisation of EA’s distinctive epistemic culture. But I think they capture something. I think they constitute a helpful first step in characterizing some of the unique, more implicit components of the EA community’s practical epistemology.

5. Practical Implications: A Case Study in Common Sense Epistemology

Thus far, our discussion has been a little bit abstract and hypothetical. So I think it’d be good to end with a case study, examining how the norms I’ve listed currently influence the priorities of longtermist EA.

To start, let’s think about climate change, which lots of people agree is a really big deal. For good reason, too. There’s a consensus among leading climate scientists that anthropogenic climate change is occurring, and could have dramatic effects. That said, there’s also a lot of uncertainty surrounding the effects of climate change. Despite this scientific consensus, our models for anthropogenic climate change are imprecise and imperfect.

Now let’s consider a different case, one requiring a slightly longer commute on the crazy train. Many people in leading positions at EA organizations — perhaps most, though I’m not sure — believe that the most important cause for people to work on concerns risks from AGI, or artificial general intelligence. The concern is that, within (at least) the next four or five decades, we’ll develop, through advances in AI, a generally capable agent who is much smarter than humans. And most EAs think that climate change is a less pressing priority than risks from advanced AI.

(This is from Twitter, after all, and Kevin has a pretty abrasive rhetorical style, so let’s not be too harsh)

Like Kevin, many people are uncomfortable with EA’s choice of relative prioritization. Lots of people feel as though there is something crucially different about these two cases. Still, I don’t think modeling this divergence as simply a difference in the relative credences of these two groups is enough to explain this gap.

Something else feels different about the two cases. Something, perhaps related to the different type of uncertainty we have about the two cases, leads many people to feel as though risks from AGI shouldn’t be prioritized to quite the same degree as we prioritize the risks from climate change.

The tide is changing, a little, on the appropriate level of concern to have about risks from advanced AI. Many more people (both inside and outside EA) are more concerned about risks from AI than they were even five years ago. Still, outside of EA, I think there remains a widespread feeling — due to the nature of the evidence we have about anthropogenic climate change compared to evidence we have about risks from advanced AI — that we ought to prioritize climate change. And I think the principles I’ve outlined help explain the divergence between the median EA view and the median view of other groups, who nonetheless share comparable scientific and technical literacy.

Now, EAs will offer defenses for their focus on AI risk, and while this is not the essay to read for detailed arguments about the potential risks from AGI (other, more in-depth pieces do a better job of that), I will note that there is clearly something to be said in favor of that focus. However, the things that may be said in favor rely on some of our stated, implicit principles — implicit, epistemic principles which are (as far as I know) unique to EA, or at least to adjacent communities.

The case for AI risk relies on expanding the range of hypotheses under consideration to include those that more hard-nosed, ‘scientifically oriented’ people are less likely to consider. The case for AI risk relies on generating some way of capturing how much uncertainty we have concerning a variety of speculative considerations — considerations such as “how likely are humans to remain in control if we develop a much more capable artificial agent?”, or “will there be incentives to develop dangerous, goal-directed agents, and should we expect ‘goal-directedness’ to emerge ‘naturally’, through the sorts of training procedures that AI labs are actually likely to use?”. These are considerations for which our uncertainty is often represented using probabilities. The case for prioritizing AI risk relies on thinking that a certain sort of epistemic capability remains present, even in speculative domains. Even when our data is scarce, and our considerations sound speculative, there’s something meaningful we can say about the risks from advanced AI, and the potential paths we have available to mitigate these risks.

If EA is to better convince the unconvinced, at least before it’s too late, I think discussions about the relative merits of EA would do well to focus on the virtues of EA’s distinctive epistemic style. It’s a discussion, I think, that would benefit from collating, centralizing, and explicating some of the more informal epistemic procedures we actually use when making decisions.

6. Conclusion

Like many others, I care about the welfare of people in the future.

I don’t think this is unusual. Most people do not sit sanguine if you ask them to consider the potentially vast amount of suffering in the future, purely because that suffering occurs in later time. Most people are not all that chipper about human extinction, either. Especially when — as many in the EA community believe — the relevant extinction event has a non-trivial chance of occurring within their lifetimes.

But, despite the fact that EA’s values are (for the most part) not that weird, many still find EA’s explicit priorities weird. And the weirdness of these priorities, so I’ve argued, arises from the fact that EA operates with an unusual, and often implicit epistemology. In this essay, I’ve tried to more explicitly sketch some components of this implicit epistemology.

As I’ve said, I see myself as part of the EA project. I think there’s something to the style of reasoning we’re using. But I also think we need to be more explicit about what it is we’re doing that makes us so different. Without better anthropological accounts of our epistemic peculiarities, I think we miss the opportunity for better outside criticism. And, if our epistemic culture really is onto something, we miss the opportunity to better show outsiders one of the ways in which EA, as a community, adds distinctive value to the broader social conversation.

For that reason, I think it’s important that we better explicate more of our implicit epistemology — as a key part of what we owe the future.

(A more philosophical appendix, if you’re interested, can be found on the EA Forum)

Granted, the purchase of this argument has been disputed — see here, for example [h/t Sebastien]. Still, we're setting up the theories EAs doing research tend to explicitly endorse, rather than exhaustively evaluating these philosophical commitments. Indeed, many of the philosophical commitments endorsed by EAs (including Expected Value Theory, which we'll discuss later) are controversial — as with almost everything in philosophy.

A more focused paper has a higher estimate still, with Toby Newberry claiming that an “extremely conservative reader” would estimate the expected number of future people at 10^28. [h/t Calvin]

Admittedly, the correct epistemic response to peer disagreement is disputed within philosophy. But I think the move I stated rests on a pretty intuitive thought. If even the experts are disagreeing on these questions, then how confident could you possibly be, as a non-expert, in believing that you're a more reliable 'instrument' of the truth in this domain than the experts devoting their lives to this area?

Notably, the Intergovernmental Panel on Climate Change (IPCC), who write reports on the risks posed by climate change, provide scientists with an uncertainty framework for communicating their uncertainty. In their guide to authors, the IPCC explicitly state that probabilities should only be used when “a sufficient level of evidence and degree of agreement exist on which to base such a statement”.

This is different from the more technical definitions of ‘fanaticism’ sometimes discussed in decision theory, which are much stronger. My definition is also much vaguer. In this section, I'm talking about someone who is fanatical with respect to EA resources, though one can imagine being personally fanatical, or fanatical with respect to the resources of some other group.

I was involved in EA around 2014, and a principle that was mentioned back then seems to have disappeared from the EA lexicon since–that is, one sign of a good intervention is that it dominates another currently funded intervention. That is, for every dollar, it probabilistically improves upon every goal of that dollar to move it to the new intervention from the old one. Things like moving funds from bednets to x-risk cannot dominate because in at least some worlds the x-risk dollar is literally burned; it does nothing. I don't think this was just an epistemic principle but also a practical one in that when trying to convince somebody to move their money from one place to another, if they are honest about the reasons they are donating, demonstrating dominance is very *convincing* in a way I've found modern EA has stopped caring so much about, instead pursuing like-minded whales or homegrown earners-to-give (no commentary about the wisdom of that now).

Wow that paper in footnote 5 is amazing! Fanaticism (in the strong sense used in that paper, where you should accept Pascal's-Mugging-like scenarios) seems clearly wrong, but they point out some bizarre consequences of denying it!

What do you make of this? How do you feel about extremely small probabilities of extremely high-value events (like a 10^-100 chance of 3↑↑↑↑3 blissful lives)? Should we put all EA resources towards those types of scenarios?